March 5, 2019 feature

Generating cross-modal sensory data for robotic visual-tactile perception

Perceiving an object only visually (e.g. on a screen) or only by touching it can sometimes limit what we are able to infer about it. Human beings, however, have the innate ability to integrate visual and tactile stimuli, leveraging whatever sensory data is available to complete their daily tasks.

Researchers at the University of Liverpool have recently proposed a new framework to generate cross-modal sensory data, which could help to replicate both visual and tactile information in situations in which one of the two is not directly accessible. Their framework could, for instance, allow people to perceive objects on a screen (e.g. clothing items on e-commerce sites) both visually and tactually.

"In our daily experience, we can cognitively create a visualization of an object based on a tactile response, or a tactile response from viewing a surface's texture," Dr. Shan Luo, one of the researchers who carried out the study, told TechXplore. "This perceptual phenomenon, called synesthesia, in which the stimulation of one sense causes an involuntary reaction in one or more of the other senses, can be employed to make up an inaccessible sense. For instance, when one grasps an object, our vision will be obstructed by the hand, but a touch response will be generated to 'see' the corresponding features."

The perceptual phenomenon described by Dr. Luo typically occurs when a perception source is unavailable (e.g., when touching objects inside a bag without being able to see them). In such situations, humans might "touch to see" or "see to feel," interpreting features related to a particular sense based on information gathered using their other senses. If replicated in machines, this visual-tactile mechanism could have several interesting applications, particularly in the fields of robotics and e-commerce.

If robots were able to integrate visual and tactile perception, they could plan their grasping and manipulation strategies more effectively based on the visual characteristics of the objects they are working with (e.g. shape, size, etc.). In other words, robots would be able to infer the overall tactile properties of objects from camera images before grasping them. Conversely, while grasping an object outside of the camera's field of view, they could rely on tactile responses to make up for the lack of visual information.

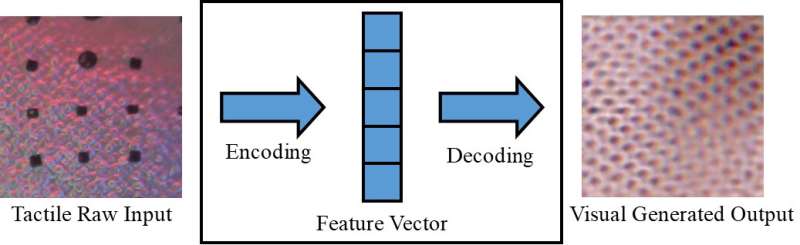

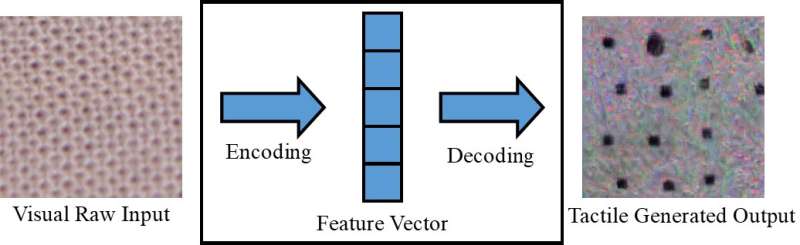

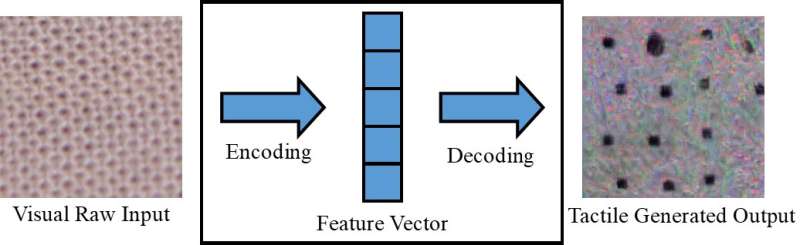

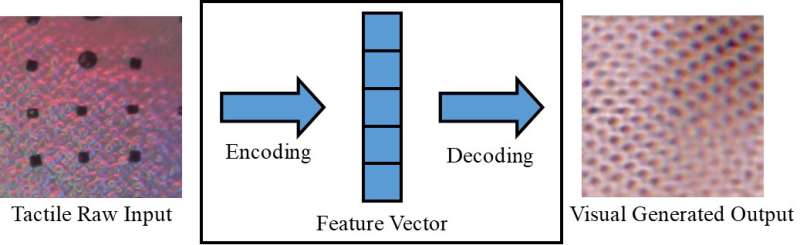

If paired with a tactile device, which has not yet been developed, the framework proposed by Dr. Luo and his colleagues could also be applied to e-commerce, for instance by allowing customers to feel the fabric of clothes before purchasing them. With this application in mind, the researchers used conditional generative adversarial networks (cGANs) to generate pseudo visual images from tactile data and, conversely, pseudo tactile outputs from visual data.
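Conditional GANs for image-to-image translation are often trained with a pix2pix-style objective: a discriminator judges (input, output) pairs, and the generator is rewarded both for fooling it and for staying close to the ground-truth output. The minimal sketch below computes those two losses from discriminator probabilities. It assumes a pix2pix-style loss with an L1 term weighted by a hypothetical `l1_weight` of 100; the article does not specify the researchers' exact objective or network architecture, and the networks themselves are omitted.

```python
import numpy as np

EPS = 1e-7  # keeps log() away from zero


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator.

    d_real: D's probabilities for real (condition, target) pairs, pushed towards 1.
    d_fake: D's probabilities for (condition, G(condition)) pairs, pushed towards 0.
    """
    d_real = np.clip(d_real, EPS, 1 - EPS)
    d_fake = np.clip(d_fake, EPS, 1 - EPS)
    return float(-np.mean(np.log(d_real)) - np.mean(np.log(1 - d_fake)))


def generator_loss(d_fake, generated, target, l1_weight=100.0):
    """Adversarial term (fool D) plus an L1 term (stay close to the real output).

    l1_weight is an assumed value, not one reported for this study.
    """
    d_fake = np.clip(d_fake, EPS, 1 - EPS)
    adversarial = -np.mean(np.log(d_fake))
    l1 = np.mean(np.abs(generated - target))
    return float(adversarial + l1_weight * l1)
```

Training would alternate between minimizing `discriminator_loss` for the discriminator and `generator_loss` for the generator. For the reverse direction (visual to tactile), the same losses apply with the two modalities' roles swapped, typically using a separate generator and discriminator.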

"In online marketplaces, customers shop by viewing pictures of clothes or other items," Dr. Luo said. "However, they are unable to touch these items to feel their materials. Feeling an item is quite important while shopping, particularly when buying delicate items, such as underwear. Allowing users to feel items at home, using a tactile device that is yet to be developed, the cross-modal sensory data generation scheme proposed in our paper can help e-commerce customers to make more informed choices."

Dr. Luo and his colleagues evaluated their model on the ViTac dataset, which contains macro images and tactile readings (captured using a GelSight sensor) of 100 different types of fabric. They found that it could effectively predict sensory outputs for one sense (i.e. vision or touch) using data relevant to the other.

"We take texture perception as an example: visual input images of a cloth texture are used to generate a pseudo tactile reading of the same piece of cloth; conversely, tactile readings of a cloth are employed to predict a visual image of the same cloth," Dr. Luo explained. "The textures of fabrics, i.e., the yarn distribution patterns, appear similarly in a visual image and a pressure distribution (i.e. tactile) reading. However, this work can also be extended to achieve cross-modal visual-tactile data generation for the perception of other object properties, by considering the differences between the two domains."
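The point that yarn patterns appear similarly in both modalities can be illustrated with a toy check, which is not part of the researchers' method: if a fabric's yarn repeats at a fixed spacing, a visual image and a tactile reading of it should show the same dominant spatial period. The sketch below assumes synthetic, perfectly periodic arrays standing in for a macro image and a tactile map; the function name `dominant_period` is hypothetical, and real cloth textures and GelSight readings are far less regular.

```python
import numpy as np


def dominant_period(image):
    """Estimate the dominant spatial period (in pixels) along the x axis of a 2-D array."""
    profile = image.mean(axis=0)             # average the rows into a 1-D profile along x
    profile = profile - profile.mean()       # remove the constant (DC) offset
    spectrum = np.abs(np.fft.rfft(profile))  # magnitude spectrum of the real-valued profile
    k = int(np.argmax(spectrum[1:])) + 1     # strongest non-zero frequency bin
    return len(profile) / k


# Hypothetical synthetic "fabric": a yarn pattern repeating every 8 pixels,
# rendered once as image brightness and once as contact pressure.
rng = np.random.default_rng(0)
x = np.arange(64)
visual = np.tile(0.5 + 0.4 * np.sin(2 * np.pi * x / 8), (32, 1))
visual += rng.normal(0.0, 0.05, visual.shape)   # simulated sensor noise
tactile = np.tile(3.0 + 1.2 * np.cos(2 * np.pi * x / 8), (32, 1))
tactile += rng.normal(0.0, 0.05, tactile.shape)

print(dominant_period(visual), dominant_period(tactile))  # 8.0 8.0
```

The two arrays differ in offset, amplitude and phase, yet share the same spatial frequency, which is the kind of shared structure a cross-modal generator can exploit. Properties that do not show up this directly in both domains would need the additional modelling Dr. Luo alludes to.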

In their study, Dr. Luo and his colleagues generated realistic tactile and visual patterns for different fabrics when tactile or visual information, respectively, was absent. Using their framework, the researchers successfully 'replicated' tactile elements of fabrics from visual data, and vice versa.

"To our best knowledge, this work is the first attempt to achieve robotic cross-modal visual-tactile data generation, which can also be extended to cross-modal data generation for other modalities," Dr. Luo said. "The practical implications of our study are that we can make use of other senses to make up an inaccessible sense."

In the future, the framework proposed by Dr. Luo and his colleagues could be used to improve grasp and manipulation strategies in robots, as well as to enhance online shopping experiences. Their method could also be used to expand datasets for classification tasks, by generating sensory data that would otherwise be inaccessible.
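As a rough illustration of dataset expansion, the sketch below fills in missing tactile readings from visual data with a generator and marks which readings are synthetic. Everything here is hypothetical: `Sample`, `complete_tactile` and `double_values` are illustrative names, and `double_values` merely stands in for a trained visual-to-tactile model such as the cGAN described in the article.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence


@dataclass
class Sample:
    label: str
    visual: Optional[Sequence[float]]
    tactile: Optional[Sequence[float]]
    synthetic_tactile: bool = False  # True when the tactile reading was generated, not measured


def complete_tactile(
    samples: List[Sample],
    generate_tactile: Callable[[Sequence[float]], Sequence[float]],
) -> List[Sample]:
    """Fill in missing tactile readings from visual data, leaving measured ones untouched."""
    completed = []
    for s in samples:
        if s.tactile is None and s.visual is not None:
            completed.append(Sample(s.label, s.visual, generate_tactile(s.visual), True))
        else:
            completed.append(s)  # already measured, or no visual data to generate from
    return completed


def double_values(visual: Sequence[float]) -> List[float]:
    """Hypothetical stand-in for a trained visual-to-tactile generator."""
    return [2.0 * v for v in visual]


data = [Sample("denim", [0.2, 0.8], None), Sample("silk", [0.1, 0.1], [0.05, 0.05])]
for s in complete_tactile(data, double_values):
    print(s.label, s.tactile, s.synthetic_tactile)
```

Keeping the `synthetic_tactile` flag lets a classifier be evaluated on measured readings only, so generated data can enlarge the training set without inflating test results.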

"In future research, we will try to apply our method to a number of different tasks, such as visual and tactile classification in a real world setting, with objects that vary in appearance (e.g. shape, color etc.)," Dr. Luo said. Furthermore, the proposed visual-tactile data generation method will be used to facilitate robotic tasks, such as grasping and manipulation."

The paper outlining this recent study, pre-published on arXiv, will be presented at the 2019 International Conference on Robotics and Automation (ICRA), which will take place in Montreal, Canada, between the 20th and 24th of May. At the conference, Dr. Luo will also be running a workshop related to the topic of his study, called "ViTac: Integrating vision and touch for multimodal and cross-modal perception."

More information: "Touching to see" and "seeing to feel": robotic cross-modal sensory data generation for visual-tactile perception. arXiv:1902.06273 [cs.RO]. arxiv.org/abs/1902.06273

© 2019 Science X Network